논문링크 : https://arxiv.org/abs/2306.14824

Kosmos-2: Grounding Multimodal Large Language Models to the World

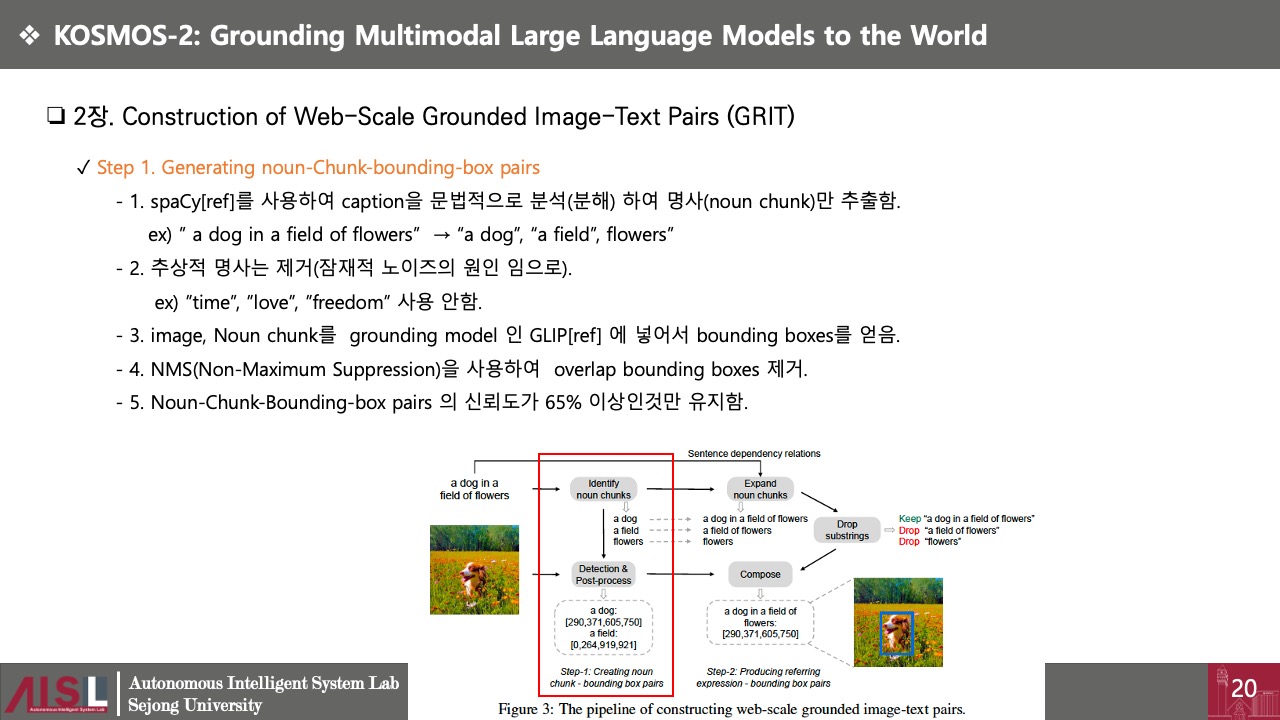

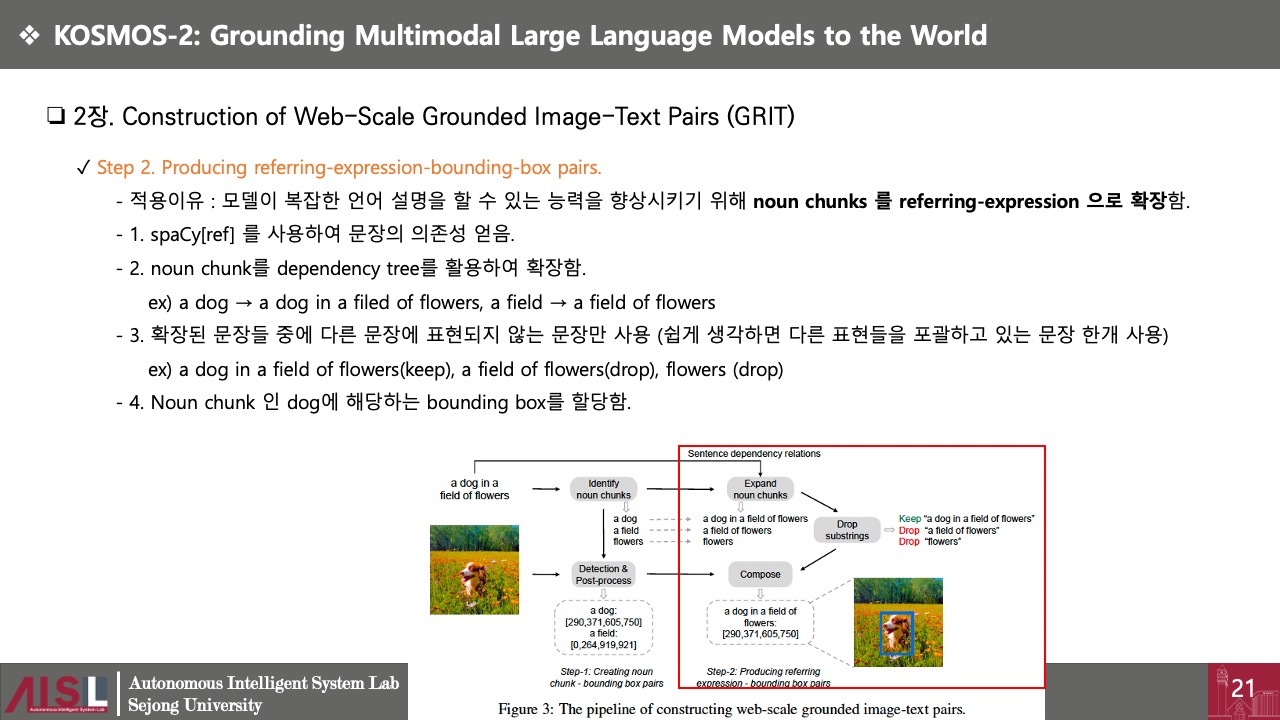

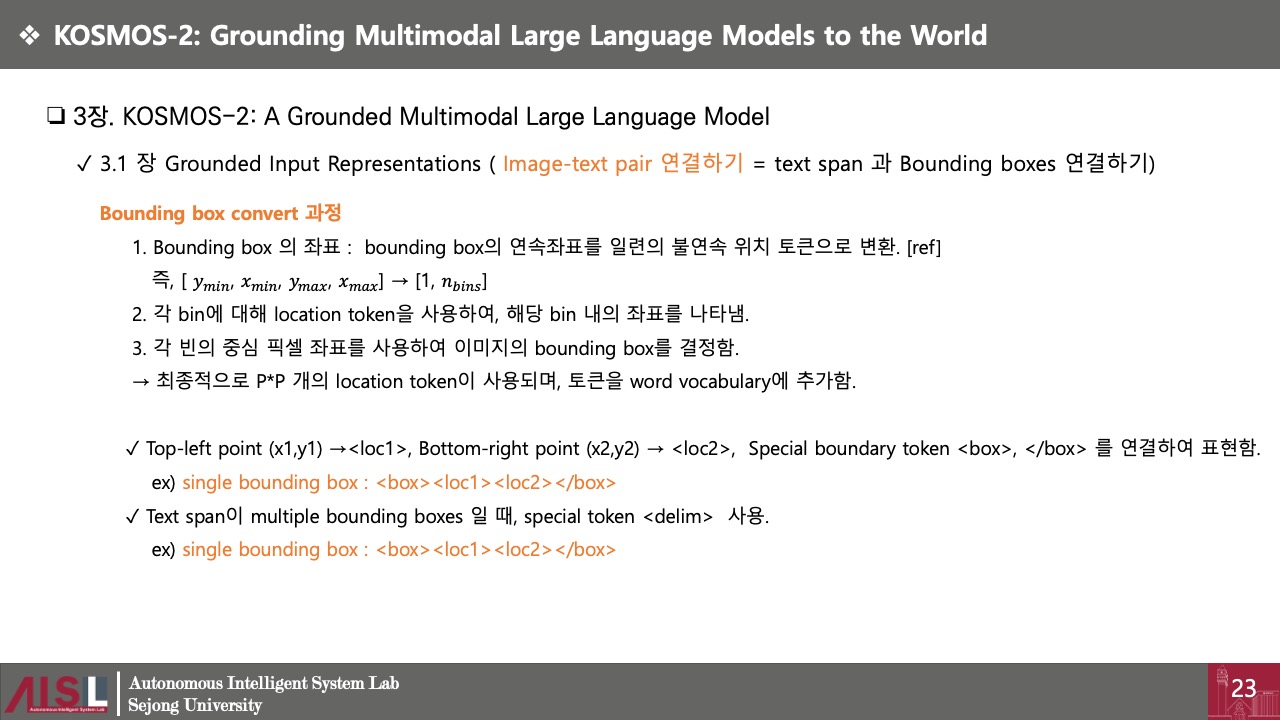

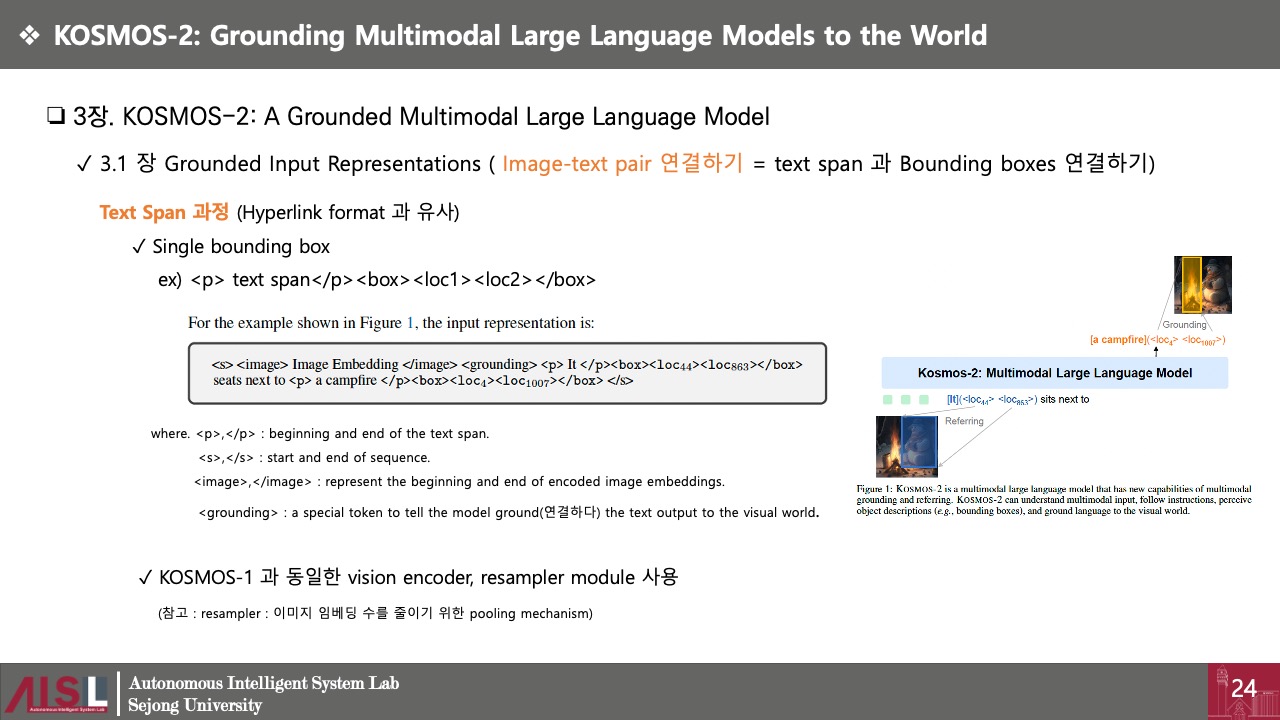

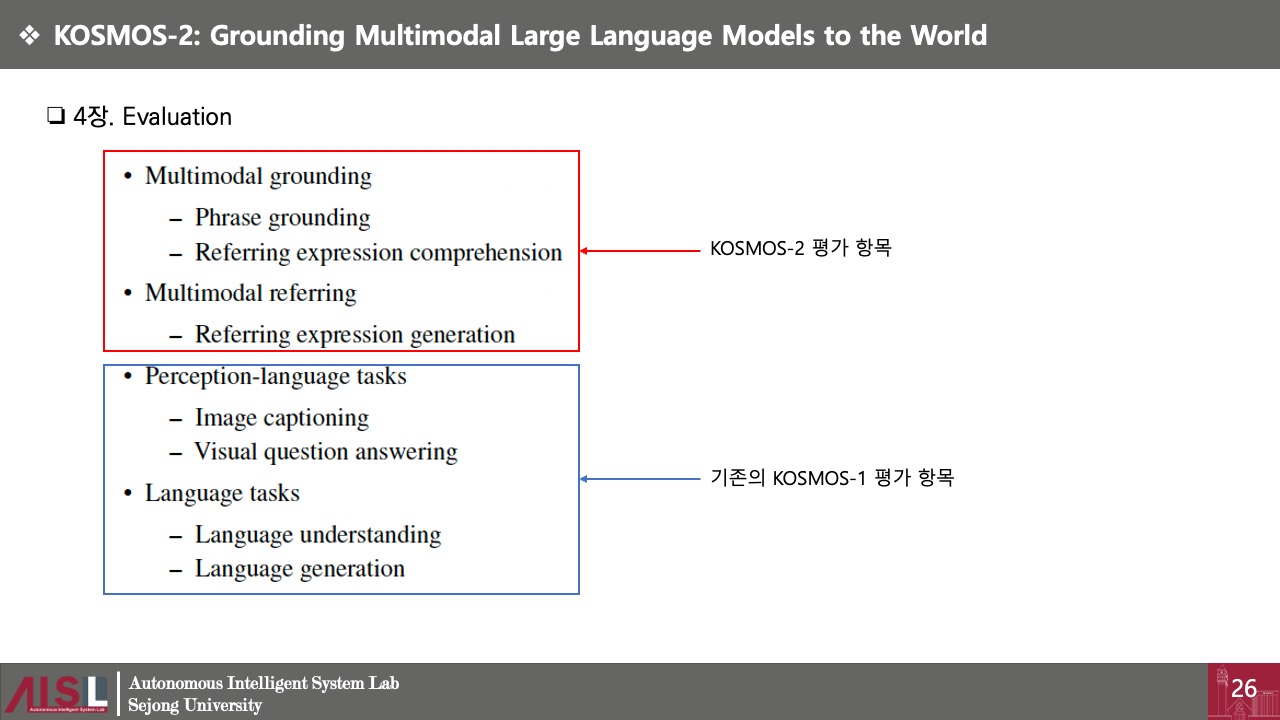

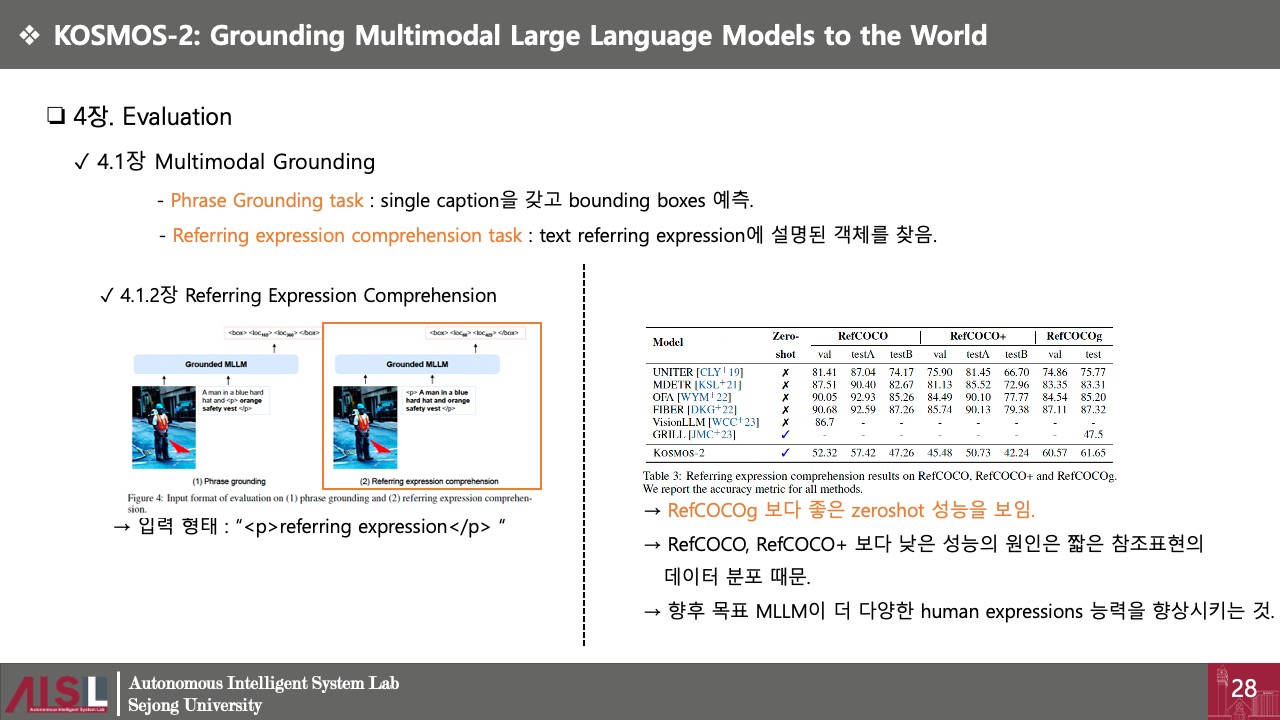

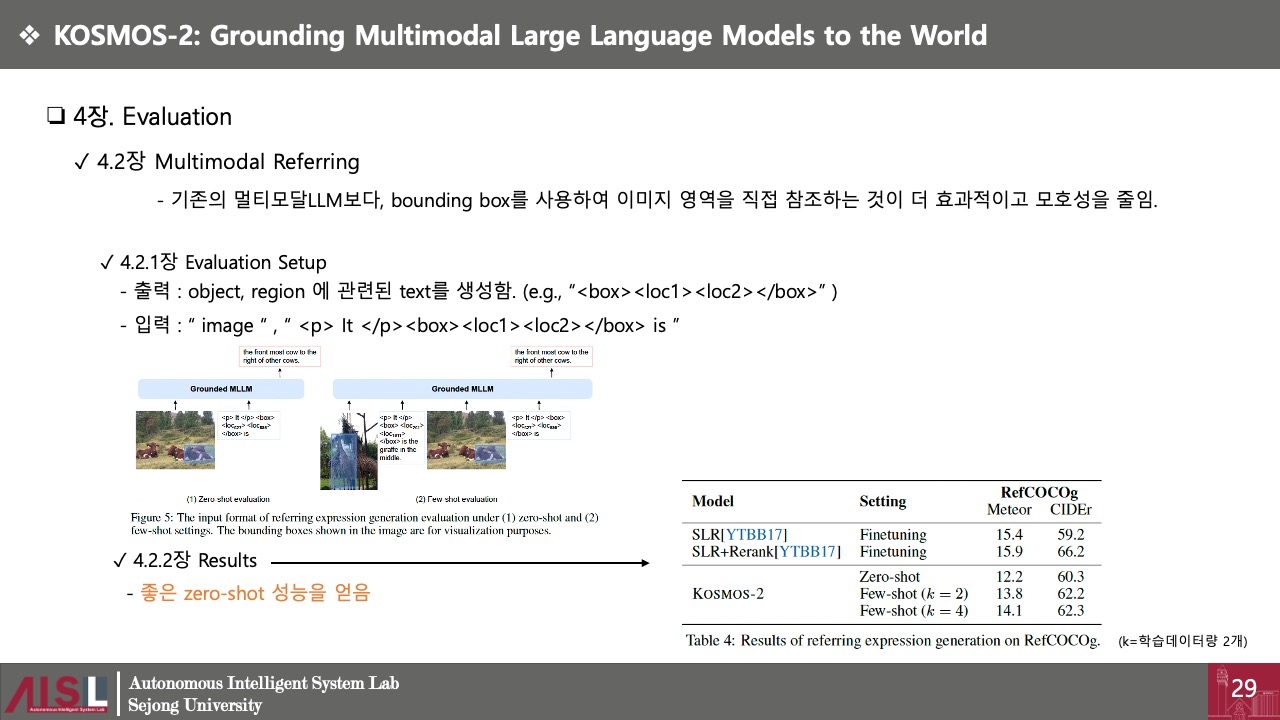

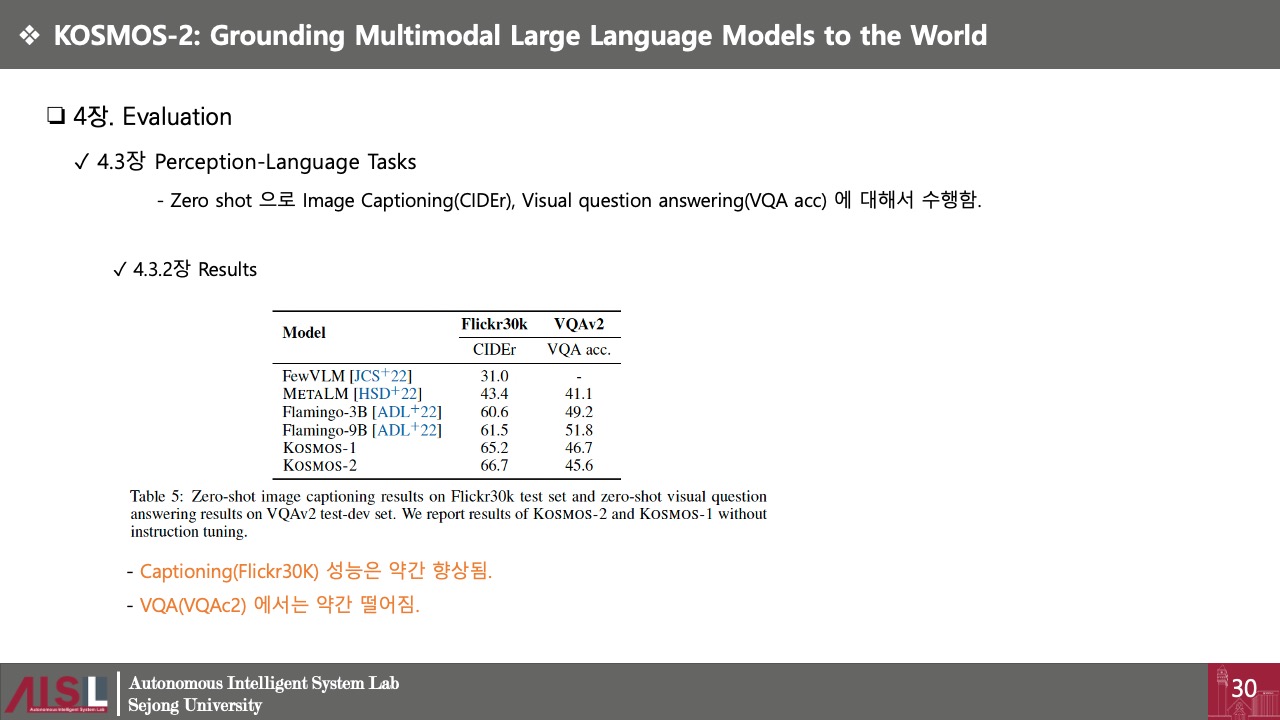

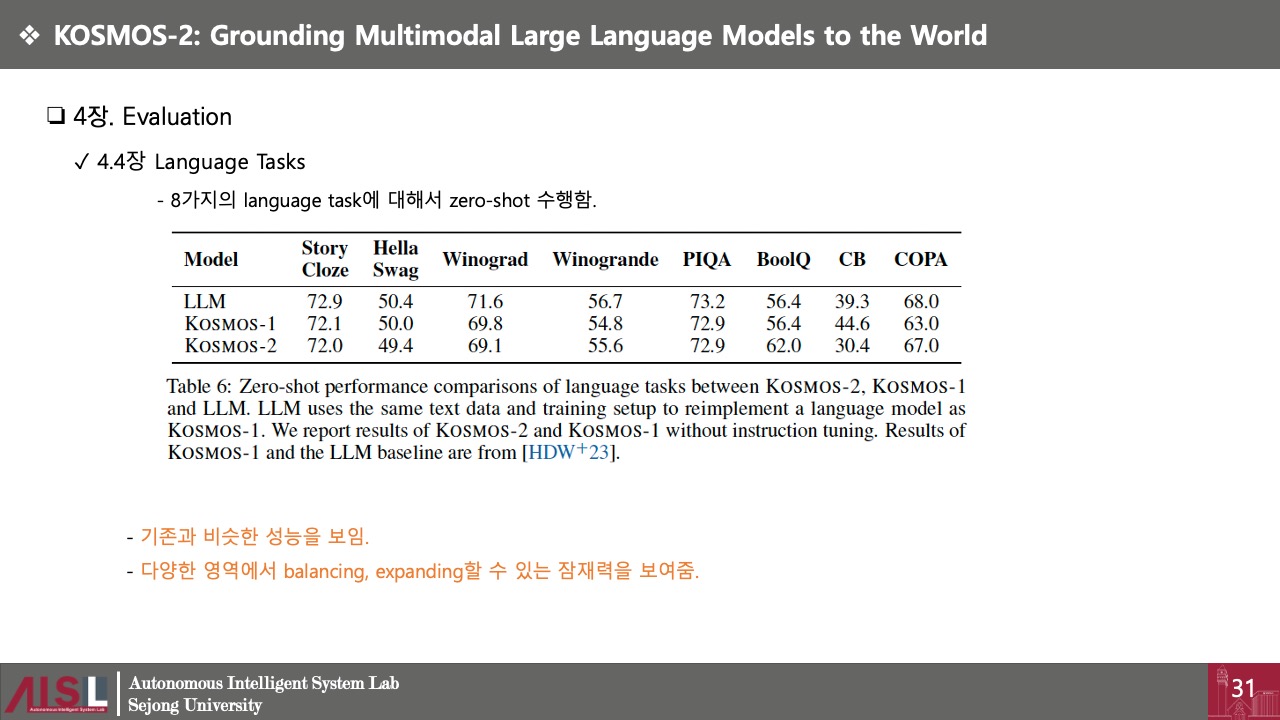

We introduce Kosmos-2, a Multimodal Large Language Model (MLLM), enabling new capabilities of perceiving object descriptions (e.g., bounding boxes) and grounding text to the visual world. Specifically, we represent refer expressions as links in Markdown, i

arxiv.org

Published : 2023.07 (arXiv)

Citation : 132회 (24.02.09기준)